Switching Infrastructure in a data center

In my previous article, we saw the different switching architectures that define the logical design of data center cabling. Fat-tree (leaf-spine) architecture is clearly the leading emerging design, while the traditional 3-tier architecture is being phased out in many network environments. When network equipment is distributed across a data center, the physical architecture plays a major role in determining the design, cost, and labor of your network. Don’t you want to know how these switches are physically placed, and how to place them in the best and most efficient way? In this article, we will see how the fat-tree (leaf-spine) architecture is physically implemented in the data center, and the various types of implementation.

In a fat-tree (leaf-spine) switch fabric, data center managers face multiple configuration options that require decisions about the application, the cabling, and where to place the access switches that connect to servers. The leaf switches (access switches) can reside in traditional centralized network distribution areas, in middle of row (MoR) positions, or in end of row (EoR) positions, all of which use structured cabling to connect to the servers. Alternatively, they can be placed in a top of rack (ToR) position, using point-to-point cabling within the cabinet to connect to the servers. In each switching infrastructure type, the name indicates the location of the leaf switches. Let us look at each of these in detail.

Special Notes

I strongly suggest that you first understand the terms below; they will make it much easier to follow the switching infrastructure concepts shown in the pictures.

MDA – MAIN DISTRIBUTION AREA

The space where core layer equipment such as routers, LAN/SAN switches, PBXs, and Muxes are located.

HDA – HORIZONTAL DISTRIBUTION AREA

The space where aggregation layer equipment such as LAN/SAN/KVM switches are located.

EDA – EQUIPMENT DISTRIBUTION AREA

The space where access layer equipment such as LAN/SAN/KVM switches and servers are located.

ZDA – ZONE DISTRIBUTION AREA

The space where a consolidation point or other intermediate connection point is located.

It also helps to have an overall idea of switching architectures and, in particular, to understand the leaf-spine structure, since it is the backbone of the designs below.

Centralized

This is the traditional cabling architecture, in which network switching is centralized in a row of HDA cabinets and racks. This switching infrastructure is largely no longer used in current data center operations, except in legacy installations that have existed for years.

Note: The rack shown in VIOLET (HDA) marks the position of the leaf switches.

In this switching infrastructure model, all the connections from all the racks are pulled to a centralized rack that contains the access switches. Because of this, there may be multiple racks in the same row that contain leaf switches.

Centralized advantages (Pros)

· Simple to design, implement and maintain

· Minimized network bottlenecks

· Good port utilization

· Easy device management

Centralized disadvantages (Cons)

· Large number of cables

· Cable overlaps

· Difficult cable pathway design and lack of scalability

End of Row (EoR)

In an EoR switching infrastructure, the leaf switches are placed at the end of each row, in a rack dedicated to them. This design uses structured cabling with passive patch panels as the connection point between the access switches and the servers. Patch panels that mirror the switch and server ports (cross-connect) at the EoR location connect to corresponding patch panels at the access switch and in the server cabinets using permanent links. The connections between switch ports and server ports are then made at the cross-connect via patch cords. Alternatively, the design can be built without patch panels. Each server cabinet in the row has twisted pair copper cabling (typically Category 5e or 6/6A) or fiber optic cables routed through overhead cable trays or below a raised floor to the “End of Row” switch.
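Because every server connection in a cross-connect EoR design passes through several cords and permanent links, it can help to add up one end-to-end channel. The sketch below assumes the commonly cited 100 m maximum for a twisted pair copper channel (for example 1000BASE-T, or 10GBASE-T over Category 6A); the segment names and lengths are hypothetical values for illustration, not figures from the source.

```python
from dataclasses import dataclass

# Assumption: commonly cited maximum length of a twisted pair copper channel,
# measured end to end including all patch cords.
MAX_CHANNEL_M = 100.0


@dataclass
class Segment:
    name: str
    length_m: float


def channel_length(segments: list[Segment]) -> float:
    """Total length of one switch-port-to-server-port channel."""
    return sum(s.length_m for s in segments)


def fits_copper_channel(segments: list[Segment], limit_m: float = MAX_CHANNEL_M) -> bool:
    """True if the channel stays within the assumed copper length limit."""
    return channel_length(segments) <= limit_m


# Hypothetical EoR cross-connect channel, server end to switch end.
eor_channel = [
    Segment("server patch cord", 3.0),
    Segment("permanent link, server cabinet to cross-connect", 35.0),
    Segment("cross-connect patch cord", 2.0),
    Segment("permanent link, cross-connect to switch cabinet", 5.0),
    Segment("switch patch cord", 3.0),
]

print(channel_length(eor_channel), fits_copper_channel(eor_channel))  # 48.0 True
```

The longest permanent link (server cabinet to cross-connect) grows with the distance between the cabinet and the end of the row, which is why long rows put pressure on copper EoR designs.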

Note: The portion shown in VIOLET (HDA) in the picture indicates the position of the leaf switches (access switches).

In the EoR architecture, each server in the individual racks is linked directly to the switch at the end of the row, which eliminates the need for individual switches in each rack. This reduces the number of network devices and improves port utilization. However, a large amount of cabling is needed for the horizontal runs.

End of Row advantages (Pros)

· Fewer switches to manage, with potentially lower switch and maintenance costs.

· Fewer ports required in the aggregation.

· Racks connected at Layer 1. Fewer STP instances to manage (per row, rather than per rack).

· Longer life, high availability, modular platform for server access.

· Cost-effective compared to top of rack (ToR) design.

· One control plane per hundreds of ports (per modular switch); replacing a 48-port line card requires a lower skill set than replacing a 48-port switch.

End of Row disadvantages (Cons)

· Requires an expensive, bulky, rigid copper cabling infrastructure that is fraught with cable management challenges.

· More infrastructure required for patching and cable management.

· Long twisted pair copper cabling limits the adoption of lower power higher speed server I/O.

· Less flexible “per row” architecture. Platform upgrades/changes affect the entire row.

Middle of row (MoR)

In an MoR switching infrastructure, the leaf switches are placed in the middle of each row, in a rack dedicated to them. Middle of Row has characteristics similar to EoR. The major difference is that, because the switches sit in the middle of the row, the farthest cabinet is only about half a row away instead of a full row, so cable runs are shorter. The rest of the terminology remains the same as for EoR.
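To make the cable-length difference between EoR and MoR concrete, here is a rough sketch that measures the horizontal distance from every cabinet to the switch rack. It assumes a hypothetical row of 21 cabinets, each 0.6 m wide, and ignores vertical drops, tray routing, and slack, so it only illustrates the geometry rather than giving real cable lengths.

```python
def run_lengths_m(racks_in_row: int, switch_rack: int, rack_width_m: float) -> list[float]:
    """Center-to-center distance along the row from each rack to the switch rack.

    Simplification: vertical drops, tray routing and service slack are ignored.
    """
    return [abs(i - switch_rack) * rack_width_m for i in range(racks_in_row)]


def summarize(racks_in_row: int, switch_rack: int, rack_width_m: float = 0.6) -> tuple[float, float]:
    """Return (longest run, average run) in metres."""
    runs = run_lengths_m(racks_in_row, switch_rack, rack_width_m)
    return max(runs), sum(runs) / len(runs)


ROW = 21  # hypothetical number of cabinets in the row
eor_max, eor_avg = summarize(ROW, switch_rack=ROW - 1)   # switch rack at the end
mor_max, mor_avg = summarize(ROW, switch_rack=ROW // 2)  # switch rack in the middle

print(f"EoR: max {eor_max:.1f} m, avg {eor_avg:.2f} m")
print(f"MoR: max {mor_max:.1f} m, avg {mor_avg:.2f} m")
```

With these assumptions the script prints a longest run of 12.0 m and an average of 6.00 m for EoR, versus 6.0 m and 3.14 m for MoR: moving the switch rack to the middle roughly halves both.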

Note: The portion shown in VIOLET (HDA) in the picture indicates the position of the leaf switches (access switches).

Top of rack (ToR)

As the name indicates, the access layer switches (leaf switches) are placed at the top of the individual racks. However, the physical location does not have to be the top of the rack; it can also be the bottom or the middle. In practice, engineers have found the top of the rack to be the better position because it offers easier accessibility and cleaner cable management. This design is sometimes also referred to as “In-Rack”.

Note: The portion shown in BLUE in the picture indicates the position of the leaf switches (access switches). No HDA is used in this configuration.

Each cabinet has its own leaf switches, and the number of leaf switches depends on the number of devices in the rack, the number of ports required, and planned future expansion. Each server or network device in the cabinet connects directly to these leaf switches, and only a few uplink cables need to leave the rack to reach the spine switches. As a result, most cables stay within the rack itself and overhead cabling is drastically reduced. Cable management is also easier with fewer cables involved, and technicians can add or remove cables more simply. An Ethernet top of rack switch is typically low profile (1RU-2RU in size) and of fixed configuration. In a data center environment, you will usually see two or more ToR switches in a rack to provide redundancy.
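The claim that only a few cables leave a ToR rack can be checked with simple arithmetic. This sketch assumes a hypothetical rack of 40 dual-homed servers (2 network ports each) and two ToR switches with 4 spine uplinks each; real port counts will differ.

```python
def cables_leaving_rack_row_switch(servers: int, ports_per_server: int) -> int:
    """EoR/MoR: every server port is cabled out of the rack to the row switch."""
    return servers * ports_per_server


def cables_leaving_rack_tor(tor_switches: int, uplinks_per_switch: int) -> int:
    """ToR: server ports terminate in-rack; only the switch uplinks leave the rack."""
    return tor_switches * uplinks_per_switch


# Hypothetical rack: 40 servers x 2 ports, 2 ToR switches x 4 uplinks each.
SERVERS, PORTS_PER_SERVER, TOR_SWITCHES, UPLINKS = 40, 2, 2, 4

print("EoR/MoR:", cables_leaving_rack_row_switch(SERVERS, PORTS_PER_SERVER))  # EoR/MoR: 80
print("ToR:", cables_leaving_rack_tor(TOR_SWITCHES, UPLINKS))                # ToR: 8
```

The 80 server connections still exist in the ToR case, but they become short in-rack patch cords instead of horizontal runs across the row.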

Top of Rack advantages (Pros)

· Copper stays “In Rack”. No large copper cabling infrastructure required.

· Lower cabling costs. Less infrastructure dedicated to cabling and patching.

· Efficient use of floor space.

· Cleaner cable management.

· Excellent scalability.

· Modular and flexible “per rack” architecture. Easy “per rack” upgrades/changes.

· Future-proofed fiber infrastructure, supporting transitions to 40G and 100G.

Top of Rack disadvantages (Cons)

· More switches to manage. More ports required in the aggregation.

· More Layer 2 server-to-server traffic in the aggregation.

· Higher network equipment costs, since more switches are needed.

· Racks connected at Layer 2. More STP instances to manage.

· One control plane per 48 ports (per switch); a higher skill set is needed for switch replacement.

· Creation of hotspots due to a higher-density power footprint.
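The first pair of ToR disadvantages ("more switches to manage" and "more ports required in the aggregation") follows from how many devices each design places in a row. The sketch below tallies both for one row; the row size, the redundant pair of EoR chassis, and the uplink counts are all hypothetical assumptions chosen only to show the scaling.

```python
from dataclasses import dataclass


@dataclass
class RowDesign:
    name: str
    switches_per_row: int    # devices that each have their own control plane
    uplinks_per_switch: int  # ports each switch consumes in the aggregation/spine layer

    @property
    def aggregation_ports(self) -> int:
        """Aggregation-layer ports needed to terminate this row's uplinks."""
        return self.switches_per_row * self.uplinks_per_switch


RACKS_PER_ROW = 10  # hypothetical

designs = [
    # Assumed EoR: a redundant pair of modular chassis serves the whole row.
    RowDesign("EoR", switches_per_row=2, uplinks_per_switch=8),
    # Assumed ToR: two fixed-configuration switches in every rack.
    RowDesign("ToR", switches_per_row=2 * RACKS_PER_ROW, uplinks_per_switch=4),
]

for d in designs:
    print(f"{d.name}: {d.switches_per_row} switches, {d.aggregation_ports} aggregation ports")
```

Under these assumptions the ToR row has 20 switches and needs 80 aggregation ports, against 2 chassis and 16 ports for EoR; the gap grows linearly with the number of racks.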

Summary

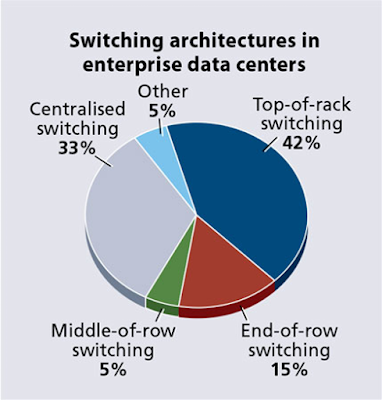

In a fat-tree switch fabric, access switches that connect to servers and storage equipment in rows can be located at the MoR or EoR position to serve the equipment in that row, or they can be located in a separate dedicated area to serve multiple rows of cabinets. MoR and EoR configurations, which function in the same manner, are popular in data center environments where each row of cabinets is dedicated to a specific purpose and growth happens row by row. ToR infrastructure has also been widely adopted by data centers because of its many advantages. When BSRIA surveyed enterprise data center operators, it found that 42 percent use a top-of-rack switching architecture, while end-of-row and middle-of-row switching combined account for 20 percent.

There is no single ideal configuration for every data center. Real-world implementation of newer leaf-spine switch fabric architectures calls for CIOs, data center professionals, and IT managers to take a closer look at the pros and cons of each option. Those pros and cons depend largely on an individual data center's needs, so ultimately it’s up to you to decide which is best for your environment.

Knowledge Credits: www.anixter.com, www.cablinginstall.com

Have a comment or a point to be reviewed? Let us grow together; feel free to comment.
